Week 09 / 10

Big project this one. For this assignment we had to build a machine. The project was divided in two: mechanical design and machine design. We worked in teams for two weeks. That sounds like a lot of time, but it just wasn't enough to finish the machine to the point our team wanted. Anyway, in the end we made our machine work!

No one in the team had previous experience building a machine like this, but in the end we managed to make it work. It was a great experience and we learned a lot.

Objectives

Make a machine.

Design and build the parts and operate it.

Automate your machine.

Weekly Progress

Two-week project:

Mechanical Design lecture with Neil Gershenfeld, you can watch it here.

Machine Design lecture with Neil Gershenfeld, you can watch it here.

For the first week we had Caleb Harper talking about the MIT Media Lab's Open Agriculture Initiative.

We need a new kind of farmer to feed the 9 billion people of 2050, and these future farmers will have to grow food in drastically different conditions than their fore-farmers. While technology has allowed large companies to progress in agricultural science, their research and data is usually proprietary and is seldom shared with local producers.

The Open Agriculture Initiative (OpenAG) at the MIT Media Lab is opening the doors on agricultural data so that more people can learn to be farmers and improve our access to local, fresh, nutritious food. Learn how OpenAG equips users with open source hardware and software platforms to conduct networked experiments in controlled-environment agriculture (CEA) systems that let farmers experiment, innovate, hack, and make our future food systems more transparent, nutritious, and sustainable.

For the second week we had Jessi Baker talking about The Provenance Project.

As production scaled up, we lost track of where the things we consume come from. Tracking records are stored in filing cabinets or on protected websites that only a few people can access. Provenance proposes to give free and transparent access to the world's supply chains, starting now.

Assignment

Our idea was to make a drawing machine that could use motion detection via Kinect to draw. We decided to start by testing the motors. After that we would do the mechanical design, then make the machine work (draw something), and finally work on the Kinect integration (the most difficult part).

We created a database of all the material we collected during the process; you can have a look here.

Testing the motors

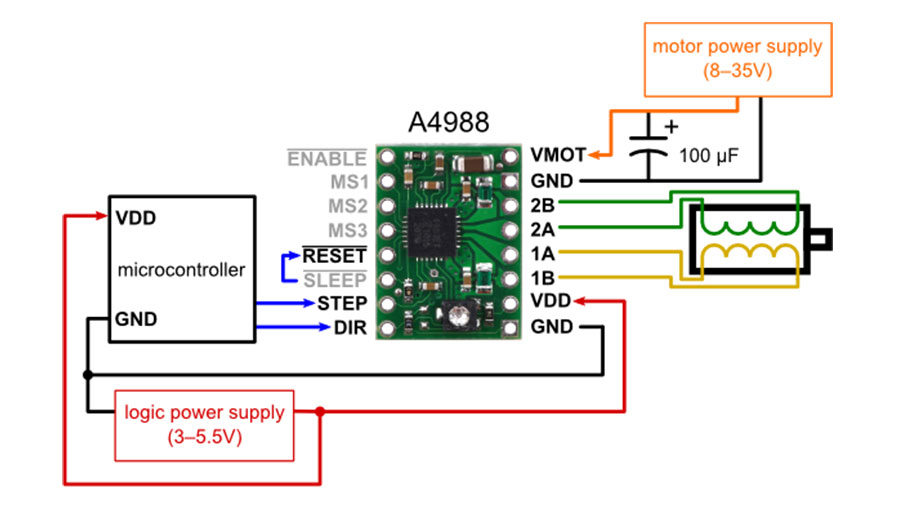

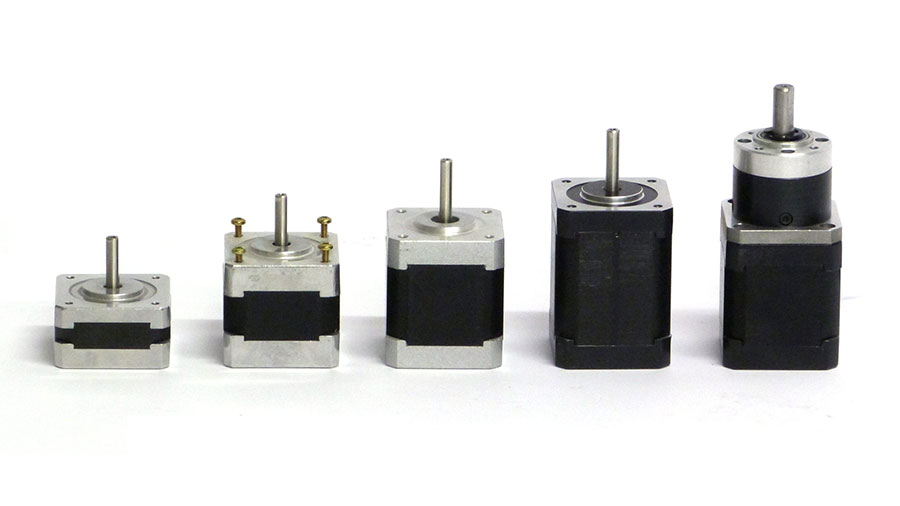

First we tried the stepper motors with Arduino to understand how they work. A stepper motor is a brushless DC motor that divides its full rotation into a number of equal steps. Its main property is that it converts a train of input pulses (usually square-wave pulses) into defined increments of the shaft position.
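To make the "equal steps" idea concrete, here is a small Python sketch of the arithmetic. The function names are ours, just for illustration. It assumes a 1.8° motor, which means 200 full steps per revolution. That is typical for NEMA 17 steppers, but it is worth checking your own motor's datasheet.

```python
# Assumption: a 1.8-degree motor (200 full steps per revolution), which is
# common for NEMA 17 steppers; check the datasheet of your own motor.
FULL_STEPS_PER_REV = 200


def step_angle(full_steps_per_rev=FULL_STEPS_PER_REV, microsteps=1):
    """Degrees the shaft turns for one input pulse."""
    return 360.0 / (full_steps_per_rev * microsteps)


def pulses_for_angle(degrees, full_steps_per_rev=FULL_STEPS_PER_REV, microsteps=1):
    """Input pulses needed to turn the shaft by `degrees`.

    Rounded to a whole number, because the motor can only stop on a step
    (or microstep) position.
    """
    return round(degrees * full_steps_per_rev * microsteps / 360.0)


print(step_angle())                         # 1.8 degrees per full step
print(pulses_for_angle(90))                 # 50 pulses for a quarter turn
print(pulses_for_angle(90, microsteps=16))  # 800 pulses with 1/16 microstepping
```

Microstepping does not change the motor itself. It only splits each full step into smaller, driver-controlled positions, so the motor needs more pulses for the same angle.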

Stepper motors have toothed electromagnets arranged around a central gear-shaped piece of iron. The electromagnets are energized by an external driver or microcontroller. There are different types; in the lab you'll find unipolar and bipolar ones.

I recommend taking a look at "All About Stepper Motors" by Adafruit.

We used and modified Dejan Nedelkovski's Simple Stepper Motor Control Arduino code to understand how steps work; you can get it here.
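The core of a step/direction driver like the Pololu A4988 is simple. Each low-to-high pulse on the STEP pin moves the motor one step (or microstep), and the DIR pin sets the direction. The speed therefore comes from how long you wait between pulses. This Python sketch computes that wait for a target speed, again assuming 200 steps per revolution. It is our own illustration, not Dejan's code. On the Arduino, the same loop would toggle STEP with digitalWrite() and wait with delayMicroseconds().

```python
# Model of a STEP/DIR driver such as the Pololu A4988: every low-to-high
# transition on STEP moves the motor one (micro)step, DIR picks the direction.
# Assumption: 200 full steps per revolution.


def half_period_us(rpm, steps_per_rev=200, microsteps=1):
    """Microseconds to keep STEP high (and then low) to spin at `rpm`."""
    pulses_per_second = rpm * steps_per_rev * microsteps / 60.0
    return 1_000_000 / pulses_per_second / 2


def pulse_train(n_steps, rpm, steps_per_rev=200, microsteps=1):
    """STEP pin waveform as (level, duration_us) pairs, two pairs per step."""
    half = half_period_us(rpm, steps_per_rev, microsteps)
    train = []
    for _ in range(n_steps):
        train.append((1, half))  # rising edge: the driver advances one step
        train.append((0, half))  # back to low, ready for the next pulse
    return train


print(half_period_us(60))  # 2500.0 us high + 2500.0 us low = 200 steps per second
```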

We tried the motors with two different controllers: the Pololu A4988 and the DK Elektronics Motor Shield. We experimented with the AFMotor library examples and tried to understand the difference between single steps, double steps, interleaved steps and microsteps. You can check AFMotor in Adafruit's documentation here.
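The step styles are easier to tell apart as coil patterns. Below is our own simplified Python model of a bipolar motor with two coils; it is not AFMotor's internal code.

- SINGLE energizes one coil at a time.
- DOUBLE energizes both coils for more torque.
- INTERLEAVE alternates the two to get half steps.
- Microstepping replaces the on/off states with sine/cosine-shaped currents.

The names and the 16-microstep default are assumptions for illustration.

```python
import math

# Coil states for a bipolar stepper with two coils, A and B:
# +1 = current flows one way, -1 = reversed, 0 = coil switched off.
SINGLE = [(1, 0), (0, 1), (-1, 0), (0, -1)]    # one coil at a time
DOUBLE = [(1, 1), (-1, 1), (-1, -1), (1, -1)]  # two coils at a time: more torque


def interleave(single=SINGLE, double=DOUBLE):
    """Alternate single and double states: 8 half steps per coil cycle."""
    sequence = []
    for s, d in zip(single, double):
        sequence.extend([s, d])
    return sequence


def microstep_currents(index, microsteps=16):
    """Relative coil currents (A, B) for microstep `index`, sine/cosine profile.

    Every full step is split into `microsteps` positions, so one coil cycle
    (4 full steps) has 4 * microsteps positions.
    """
    angle = 2 * math.pi * index / (4 * microsteps)
    return math.cos(angle), math.sin(angle)


def coil_states(mode, n_steps):
    """(A, B) state for each of `n_steps` steps in 'single', 'double' or 'interleave' mode."""
    table = {"single": SINGLE, "double": DOUBLE, "interleave": interleave()}[mode]
    return [table[i % len(table)] for i in range(n_steps)]


print(interleave())
# [(1, 0), (1, 1), (0, 1), (-1, 1), (-1, 0), (-1, -1), (0, -1), (1, -1)]
```

Interleaving doubles the resolution (8 positions instead of 4 per cycle). However, the torque alternates between the one-coil and two-coil states.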

We also tried these two programs by Janaki: Does the motor work code / Stepper code test

Mechanical Design

For the mechanical design we split the work so we could finish on time; each of us took on specific tasks.

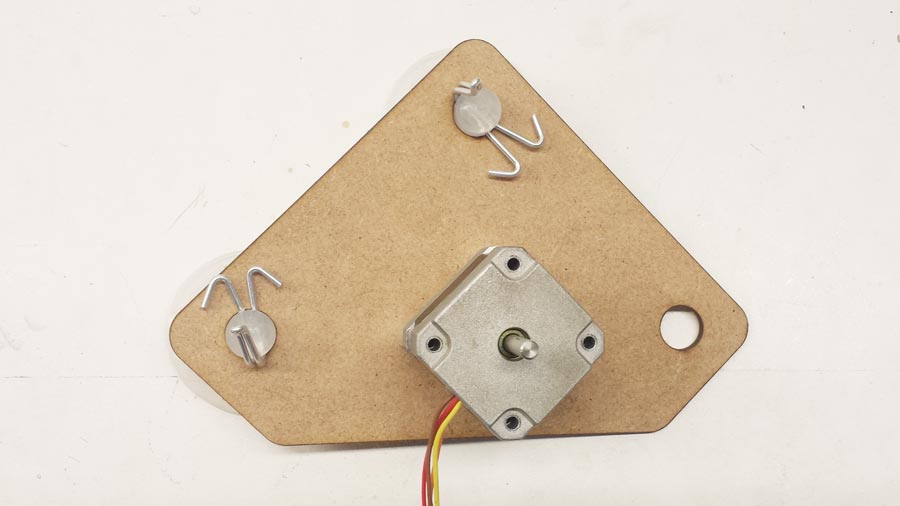

Caro designed the motor support in SolidWorks and produced it in MDF with the laser cutter. We considered different ways to attach it to surfaces and chose suction cups. They give us the flexibility to attach the machine to glass or any smooth, flat surface.

You can download the model here.

Janaki designed the spools, which roll and unroll the nylon thread that carries the pen holder. She based her design on last year's Drawbot Barcelona project. You can read her complete documentation about the spools here.

You can download the model here.
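The spool diameter sets the machine's resolution, because one step always winds or unwinds a fixed length of thread. Here is a minimal Python sketch of that relation. The 20 mm diameter and the 200 steps per revolution are hypothetical numbers, not measurements of Janaki's spools.

```python
import math

# Assumptions (hypothetical numbers, not measured from our spools):
SPOOL_DIAMETER_MM = 20.0  # diameter where the thread winds
STEPS_PER_REV = 200       # 1.8-degree stepper


def mm_per_step(spool_diameter_mm=SPOOL_DIAMETER_MM, steps_per_rev=STEPS_PER_REV, microsteps=1):
    """Thread length wound or unwound by one (micro)step of the spool."""
    return math.pi * spool_diameter_mm / (steps_per_rev * microsteps)


def steps_for_length(length_mm, **kwargs):
    """Whole number of steps that changes the thread length by `length_mm`."""
    return round(length_mm / mm_per_step(**kwargs))


print(round(mm_per_step(), 3))  # 0.314 mm of thread per full step
print(steps_for_length(100))    # 318 steps for 100 mm of thread
```

This simple model has a limitation. As thread winds onto the spool, the effective diameter grows slightly, so the real length per step drifts a little over long moves.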

My task was to hack the suction cup holders, because the original handles are curved at an angle that doesn't work well for holding the motors.

You can download the model here.

Cansu designed the pen holder. She tried several versions, and in the end we used a two-handle version. The problem with the other versions was 3D printing. We wanted to use that technique, but the prints weren't coming out well and we needed more time to optimize the model. It should work in future versions.

You can download the model here.

Citlali's part was to work on the Kinect.

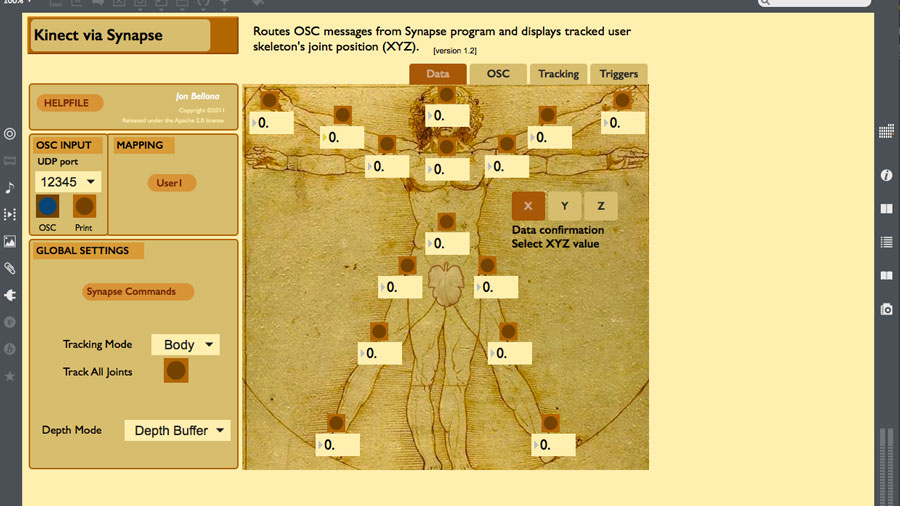

Cit wrote this about her work: "There are different methods to get the skeleton positions from this sensor. There's Open Kinect for Processing, documented by Daniel Shiffman. By the way, his documentation mentions a friend of mine (Tomás Lengeling), who worked on the development of the Kinect V2 Processing library for skeleton tracking.

In this case I worked with Synapse, an application written and distributed by Ryan Challinor that gets the input data from Kinect and sends it out as OSC messages.

I used 'Kinect-Via-Synapse', an open-source interface for use with the Kinect camera, Max/MSP, and Synapse."

You can read more about her work on Kinect here.

Defining / understanding the machine

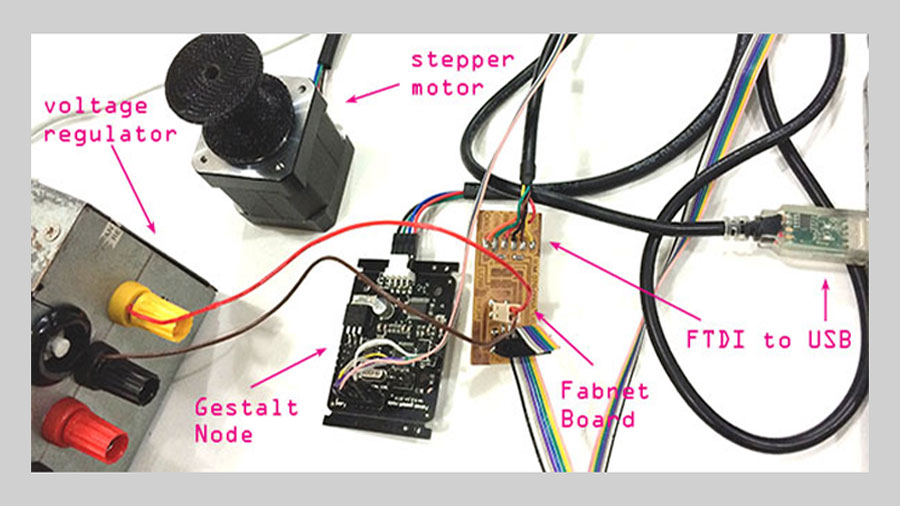

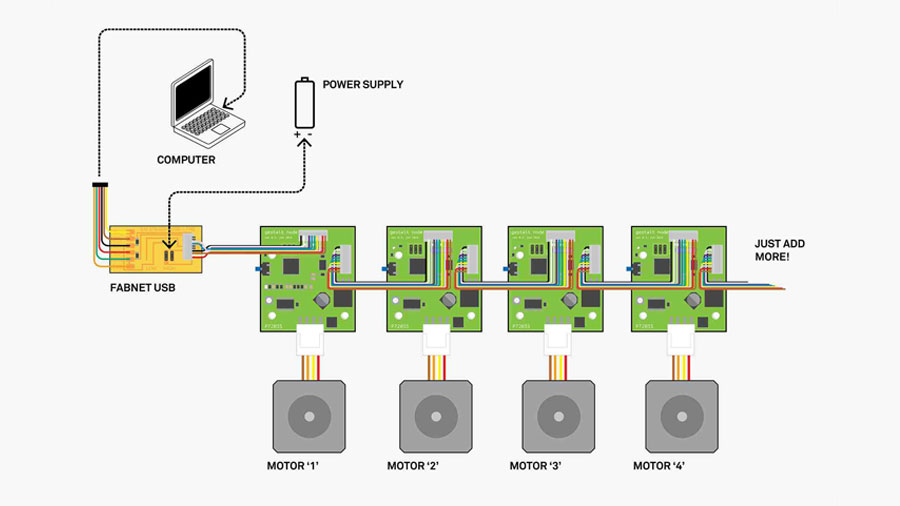

After finishing the mechanics, and knowing that the stepper motors worked, it was time to migrate to Gestalt nodes. We were lost at the beginning because it was the first time we used them. We learned that it just takes some practice and lots of failed attempts.

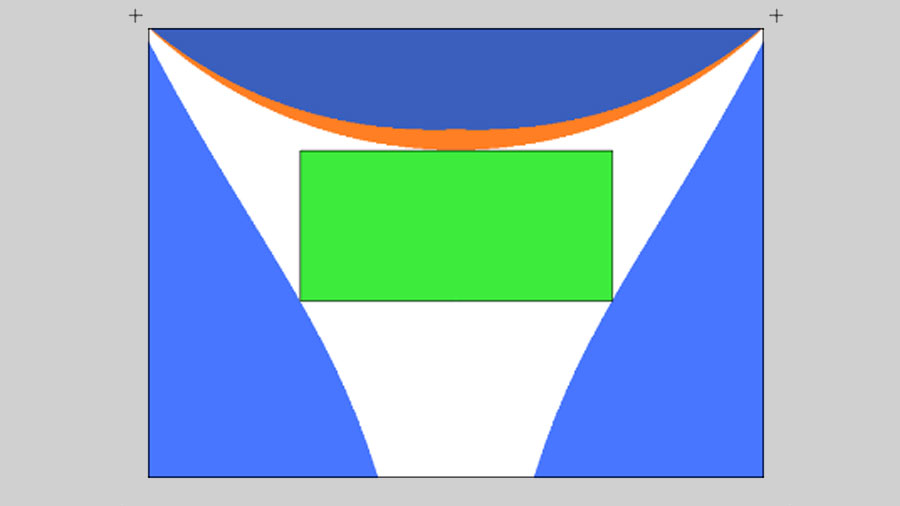

We realized that our machine was in fact a Polar Bot, so we had to change the way we were thinking about it. The most important thing was to find the right distances between the axes (the motors) on the surface to define a suitable working area. If we make the machine draw outside that area, the drawing gets distorted.

You can read more about it here.
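Here is what "Polar Bot" means in practice. The motors control the lengths of the two threads, not x and y, so every point has to be converted. This Python sketch assumes the motors sit at (0, 0) and (motor_distance, 0), with y pointing down. The function names and the 600 mm example are ours.

Because the conversion is not linear, the same motor step moves the pen by different amounts in different parts of the surface. That is one reason the working area matters. For the same reason, long straight lines are cut into short segments so they don't come out curved.

```python
import math


def string_lengths(x, y, motor_distance):
    """Inverse kinematics of a two-motor hanging ("polar") plotter.

    Assumed layout: motors (spools) at (0, 0) and (motor_distance, 0),
    with y growing downwards towards the pen.
    """
    left = math.hypot(x, y)
    right = math.hypot(motor_distance - x, y)
    return left, right


def pen_position(left, right, motor_distance):
    """Forward kinematics: where the pen hangs for given thread lengths."""
    x = (left ** 2 - right ** 2 + motor_distance ** 2) / (2 * motor_distance)
    y = math.sqrt(max(left ** 2 - x ** 2, 0.0))
    return x, y


def subdivide(p0, p1, max_segment):
    """Split the straight line p0 -> p1 into pieces no longer than max_segment.

    Changing both thread lengths at a constant rate generally draws a curve,
    so a line is cut into short segments and each end point is converted.
    """
    (x0, y0), (x1, y1) = p0, p1
    n = max(1, math.ceil(math.hypot(x1 - x0, y1 - y0) / max_segment))
    return [(x0 + (x1 - x0) * i / n, y0 + (y1 - y0) * i / n) for i in range(n + 1)]


# Example with hypothetical numbers: motors 600 mm apart, pen at (300, 400)
print(string_lengths(300, 400, 600))  # (500.0, 500.0)
```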

To set up the Gestalt nodes we followed this tutorial, working on Ubuntu. Santi, our tutor, also helped us a lot.

Hardware

Fabnet Board (files here)

Power supply

RS-485 USB adapter (connection between the computer and the FabNET)

Ribbon cable (connection between nodes)

Stepper motors (NEMA 17 in our case)

After setting everything up, we made our first try with _xy_plotter.py from the Python examples in ~\pygestalt-master\examples\machines\htmaa\

We also tried a Processing sketch made by Francesca from Fab Academy 2015.

Here is the code:

In Processing:

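```processing
// Records the mouse path while the button is held and writes it to
// position0.txt as a list of [x, y] points that Python can read back.
PrintWriter output;

int canvas = 240;  // size of the logical drawing grid
int multiple = 3;  // screen pixels per grid unit

void settings() {
  // Processing 3+ requires size() with variable arguments to be in settings()
  size(canvas * multiple, canvas * multiple);
}

void setup() {
  // Create a new file in the sketch directory
  output = createWriter("position0.txt");
  frameRate(10);  // sample the mouse 10 times per second
  ellipseMode(CENTER);
  output.print("[");  // open the outer list
}

void draw() {
  if (mousePressed) {
    ellipse(mouseX, mouseY, 10, 10);
    // Convert screen pixels back to grid units
    int posX = mouseX / multiple;
    int posY = mouseY / multiple;
    output.print("[" + posX + "," + posY + "],");  // Write the coordinate to the file
  }
}

void keyPressed() {
  output.print("[0,0]]");  // add a final [0,0] point and close the list
  output.flush();  // Writes the remaining data to the file
  output.close();  // Finishes the file
  exit();  // Stops the program
}
```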

In Python:

```python
import ast

# Path to the file written by the Processing sketch; adjust it to your machine.
# The r'' prefix keeps the Windows backslashes from being read as escape
# sequences (e.g. '\01' would otherwise become a control character).
POSITIONS_FILE = r'\Users\Caro\Desktop\01FABACADEMY\09-MACHANICAL DESIGN\processing\code\position0.txt'


def loadmoves(path=POSITIONS_FILE):
    """Import the list of [x, y] moves written by the Processing sketch."""
    # 'with' closes the file automatically, so it won't be left open
    with open(path, 'r') as textfile:
        line = textfile.read()  # read the whole file in one go, as a string
    # Safely evaluate the string and turn it into numbers:
    # going from : '[[10,10],[20,20],[10,10],[0,0]]'
    # to         : [[10, 10], [20, 20], [10, 10], [0, 0]]
    return ast.literal_eval(line)
```
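The sketch saves a point on every frame while the mouse is pressed, so a mouse that stays still produces repeated points. A small, hypothetical post-processing step in Python could drop those and scale the 240 x 240 canvas units to machine units. The scale factor is an assumption you would calibrate on your own machine.

```python
def clean_moves(moves, scale=1.0):
    """Remove repeated points and scale canvas units to machine units.

    `moves` is a list of [x, y] pairs like the one loadmoves() returns;
    `scale` (machine units per canvas unit) is a hypothetical parameter.
    """
    cleaned = []
    for x, y in moves:
        point = (x * scale, y * scale)
        # The sketch saves a point every frame, so a still mouse repeats it
        if not cleaned or cleaned[-1] != point:
            cleaned.append(point)
    return cleaned


print(clean_moves([[10, 10], [10, 10], [20, 20], [0, 0]], scale=2.0))
# [(20.0, 20.0), (40.0, 40.0), (0.0, 0.0)]
```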

The next thing we tried was Inkscape GCode Tools, a very useful plugin for generating G-code from drawings you make in Inkscape.

Process

First, set the size of your working area.

Draw your object and convert it to paths.

Set the orientation points (X, Y, Z).

Set the tool. You have to set one even if you don't use any of the tools the program provides; we chose Graffiti.

Then go to the Graffiti tools again and choose Graffiti.

Then open Path to Gcode. Remember to set the name of your file, check the units, and set the post-processor to round all values to 4 digits.

Finally, go to the Path to Gcode tab and click Apply. The program will generate a G-code file in the directory you selected earlier.
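To check what Inkscape produces, the straight moves in the G-code file can be read back with a short Python sketch. It is a minimal, hypothetical parser with three assumptions:

- G00 means travel and G01 means drawing.
- X and Y are modal: a line that omits a coordinate keeps the previous value.
- Arcs (G02/G03) are simply skipped. Gcodetools may also emit these for curves.

```python
import re

# A G00/G0/G01/G1 command at the start of a line (not G02, G10, ...)
MOVE = re.compile(r"G0?([01])(?!\d)")
# A coordinate word such as "X12.3456" or "Y-0.5"
WORD = re.compile(r"([XY])\s*(-?\d+(?:\.\d*)?)")


def parse_gcode(text):
    """Return (x, y, drawing) for every straight G00/G01 move in `text`.

    Assumptions: G00 is a travel move (pen up), G01 draws, and coordinates a
    line leaves out keep their previous value. Arcs (G02/G03) are skipped.
    """
    x = y = 0.0
    moves = []
    for raw in text.splitlines():
        # Drop ';' and '(...)' comments, normalise to upper case
        line = raw.split(";")[0].split("(")[0].strip().upper()
        command = MOVE.match(line)
        if not command:
            continue
        for axis, value in WORD.findall(line):
            if axis == "X":
                x = float(value)
            else:
                y = float(value)
        moves.append((x, y, command.group(1) == "1"))
    return moves


print(parse_gcode("G00 X10 Y20\nG01 X30.5 Y20"))
# [(10.0, 20.0, False), (30.5, 20.0, True)]
```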

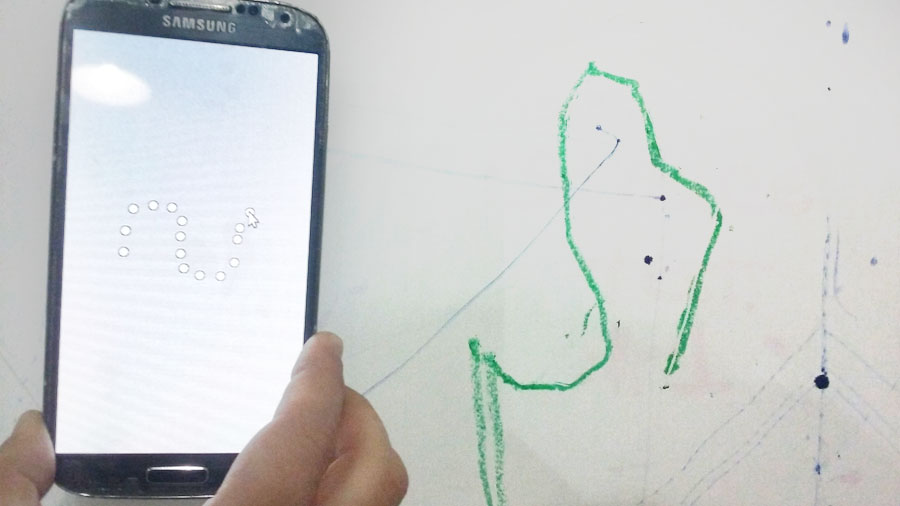

And this is the result! After two weeks of learning, success and failure, we managed to control what we draw with the machine.

The next step is implementing the Kinect. Cit managed to record movement data, but we still need to feed it to the machine automatically, in real time.
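When the Kinect data is fed to the machine, the hand positions have to land inside the working area. Here is a minimal Python sketch of that mapping, with clamping so the pen never leaves the area. The 640 x 480 camera frame and the area limits in the example are assumptions, not values from our setup.

```python
def to_working_area(hand_x, hand_y, frame_w, frame_h, area):
    """Map a hand position in camera coordinates to machine coordinates.

    `area` is (x_min, y_min, x_max, y_max) in machine units. Positions outside
    the camera frame are clamped, so the result always stays inside `area`.
    """
    x_min, y_min, x_max, y_max = area
    # Normalise to the 0..1 range and clamp
    u = min(max(hand_x / frame_w, 0.0), 1.0)
    v = min(max(hand_y / frame_h, 0.0), 1.0)
    return x_min + u * (x_max - x_min), y_min + v * (y_max - y_min)


# Hypothetical example: 640 x 480 camera frame, drawing area 100..500 x 200..600 mm
print(to_working_area(320, 240, 640, 480, (100, 200, 500, 600)))  # (300.0, 400.0)
```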

We also need to improve some mechanical parts. For example, we should replace the fishing line with a toothed belt for more stability and precision.