Just when you thought it was safe

This week's course is on 3D scanning and printing. I'd had some (read: not much) experience with 3D printing, but 3D scanning was a bit of a gray area. I'd seen a demonstration or two and wasn't impressed, and after this week I'm even less impressed; however, this could potentially be HUGE, HUGE, HUGE!!!! Read more below.

Lots of hardware and software

Our lab is really lucky to have a lot of hardware and software. We started our tour with the Structure Sensor, which captures surfaces and is integrated with the Apple ecosystem. Then we looked at a laser scanning system from Zmorph called Voxelizer that captures slices, which was very promising in terms of surface definition. Then we went on to acquire some data for 3D printing. My interest was in grabbing a scan of my wrist to custom design a bracelet.

But nothing that really captures the moment

For this first part of our investigations, we spent time acquiring different 3D scans. We used various machines, but they all proved difficult to use when it came to acquiring detailed surface scans. None of the scanners we used created anything more than a blob that looks like the scanned object covered in foam. It is interesting to note that many 3D scans of people look incredibly realistic, but this is due to the image overlay on the surface, which tricks the eye into thinking actual detail is being presented. Remove the photo layer and the scan doesn't look like anything more than a rough outline of the individual. The result was identical for nearly all the objects scanned. To arrive at anything even remotely resembling a real object, a significant amount of post-processing on the captured mesh would be necessary. The photo shows the raw surface data and the same data with the image overlay (images were generated using Meshlab since it allows managing layers).

Creating the real object

After scanning and getting the raw data, the file was opened in Meshmixer to correct the size and the mesh errors. It was then exported to STL format, loaded into Repetier-Host, and printed on the Stream printer. Standard parameters were used to print the hand. Total print time was approximately 45 minutes.
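For anyone curious what "correct the size and export to STL" amounts to under the hood, here is a minimal sketch in plain Python. It is not what Meshmixer does internally; it only assumes the mesh is available as a list of vertices and a list of triangles, and all function names and the 100 mm target are hypothetical. STL itself carries no units, but slicers generally read the numbers as millimeters.

```python
import math

def bounding_box(vertices):
    """Return (min_corner, max_corner) of a list of (x, y, z) tuples."""
    xs, ys, zs = zip(*vertices)
    return (min(xs), min(ys), min(zs)), (max(xs), max(ys), max(zs))

def scale_to_length(vertices, target_length, axis=0):
    """Uniformly scale the mesh so its extent along `axis` equals target_length."""
    lo, hi = bounding_box(vertices)
    current = hi[axis] - lo[axis]
    if current == 0:
        raise ValueError("mesh has zero extent along the chosen axis")
    factor = target_length / current
    return [(x * factor, y * factor, z * factor) for x, y, z in vertices]

def facet_normal(a, b, c):
    """Unit normal of triangle (a, b, c); zero vector for a degenerate triangle."""
    u = [b[i] - a[i] for i in range(3)]
    v = [c[i] - a[i] for i in range(3)]
    n = (u[1] * v[2] - u[2] * v[1],
         u[2] * v[0] - u[0] * v[2],
         u[0] * v[1] - u[1] * v[0])
    length = math.sqrt(sum(k * k for k in n))
    if length == 0:
        return (0.0, 0.0, 0.0)
    return tuple(k / length for k in n)

def to_ascii_stl(vertices, faces, name="scan"):
    """Serialize a triangle mesh as ASCII STL text."""
    lines = [f"solid {name}"]
    for face in faces:
        a, b, c = (vertices[i] for i in face)
        nx, ny, nz = facet_normal(a, b, c)
        lines.append(f"  facet normal {nx:.6e} {ny:.6e} {nz:.6e}")
        lines.append("    outer loop")
        for x, y, z in (a, b, c):
            lines.append(f"      vertex {x:.6e} {y:.6e} {z:.6e}")
        lines.append("    endloop")
        lines.append("  endfacet")
    lines.append(f"endsolid {name}")
    return "\n".join(lines) + "\n"

# Stand-in for a scan: a small tetrahedron, scaled so it is 100 units long in x.
verts = [(0, 0, 0), (2, 0, 0), (0, 1, 0), (0, 0, 1)]
faces = [(0, 2, 1), (0, 1, 3), (0, 3, 2), (1, 2, 3)]
scaled = scale_to_length(verts, 100.0, axis=0)
stl_text = to_ascii_stl(scaled, faces, name="hand")
```

Writing `stl_text` to a file with a `.stl` extension gives something a slicer can load. A real scan would also need its holes and stray fragments repaired first, which this sketch does not attempt.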

But now it gets interesting

One thing that greatly interested me was how to integrate the results of a 3D scan into a "real" design. We took the scan of my wrist/hand, made some corrections using Structure Sensor, and then opened the file in SolidWorks (SW). There it was just a matter of working around the imported data using SW objects. What was very interesting is that although SW imports the surface data, it is not capable of recognizing it as a surface. Therefore it is not able to, for instance, calculate the intersection between the surface and the object. This means that creating a structure that follows a surface based on geometry is not possible, and 'fitting' is an empirical effort based on following the surface with lines and curves. This is not detrimental for the bracelet since it need not be form fitting; however, if it were necessary to build a precise 'fit' based on a 3D scan, this doesn't seem possible.
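One way the "follow the surface with lines and curves" step might be made less manual is to cut the scanned mesh with a plane and use the resulting outline as a reference curve. This is not what we did here; it is only a sketch of the idea in plain Python, assuming the mesh is available as vertex and triangle lists. The function name `slice_mesh_z` is hypothetical, and triangles that merely touch the plane at a vertex are ignored for simplicity.

```python
def slice_mesh_z(vertices, faces, z_cut):
    """Return 2D line segments where the triangles cross the plane z = z_cut.

    Only edges whose endpoints lie strictly on opposite sides of the plane
    are counted, so vertices sitting exactly on the plane are skipped.
    """
    segments = []
    for face in faces:
        tri = [vertices[i] for i in face]
        points = []
        for k in range(3):
            p, q = tri[k], tri[(k + 1) % 3]
            dp, dq = p[2] - z_cut, q[2] - z_cut
            if dp * dq < 0:  # endpoints on opposite sides of the plane
                t = dp / (dp - dq)  # fraction of the way from p to q
                points.append((p[0] + t * (q[0] - p[0]),
                               p[1] + t * (q[1] - p[1])))
        if len(points) == 2:
            segments.append((points[0], points[1]))
    return segments

# Stand-in for a wrist scan: a unit tetrahedron cut halfway up.
verts = [(0, 0, 0), (1, 0, 0), (0, 1, 0), (0, 0, 1)]
faces = [(0, 2, 1), (0, 1, 3), (0, 3, 2), (1, 2, 3)]
outline = slice_mesh_z(verts, faces, 0.5)
```

The segments come back unordered; chaining them end to end gives a closed outline that could be sketched over in the CAD package at the height where the bracelet sits.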

Getting real

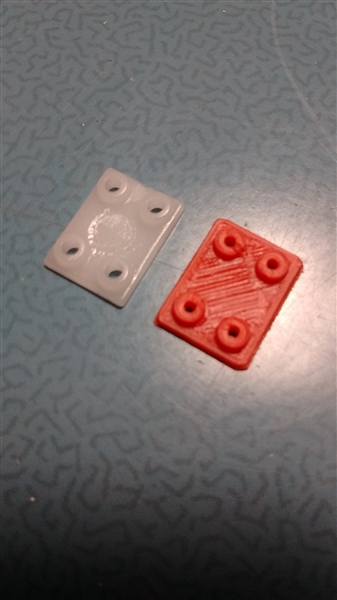

The final experiment I was interested in was actually building a technically correct object. By this, I mean an object that has real-world usability, so it needs to have precise dimensions. This is in line with Neil's request to try to find the limits of the machine. The top photo shows the original object in white and the reproduced object in red. No effort was made to optimize the surface of the piece, and this is a space for further investigation. One advantage of this work is that off-the-shelf components can be improved by substituting more robust plastics.

The model was built from measurements taken with digital calipers and then transferred to SW. This was my first measured design using SW, and there will be more to come in the final project.