My final project

When I look at existing computer-human interfaces and the ones that will come in the future, they strike me as overwhelmingly occupying one location: they will be on the user’s head, even though they may be different in essence. One might argue, of course, that the human head (as in other mammals) is where a large number of the senses are located. That’s essentially correct, and my feeling is that our heads will be very crowded with devices very soon.

For example, we might have a standard pair of headphones, or even better a headset with a microphone.

Virtual reality (henceforth VR) headsets will soon be upon us. There are already consumer products available, like the HTC Vive and the Oculus Rift. And of course, unless they incorporate the aforementioned audio headset, you will have to add one.

A similar example is an Augmented Reality (henceforth AR) headset, such as the Microsoft HoloLens or the future Magic Leap headset. Not much is known about the latter (and the firm calls the experience Mixed Reality instead of AR), but it is likely to be a headset. For AR, the concurrent use of an audio headset that covers the ears might be unlikely, but you will need audio input at least.

A more baroque device is, or will be, an electroencephalogram (EEG) input “helmet”. Some firms, like Emotiv with its EPOC, already have products. An open-source equivalent is the OpenEEG board, sensors and helmet… In the Emotiv product, sensors are also applied to the forehead in order to detect the muscular movements of facial expressions.

My humble opinion is that a device that “understands” where you are paying attention is the logical next step. For example, in the visual modality, eye-tracking devices are commonly used for research. But in my own experience as, for example, a video editor, I would love my workstation to know when I am switching from one tab (or window) to the next in Premiere (or any other software). It has always puzzled me that, although there are keyboard shortcuts, the shift of my attention from one tab to another cannot be mapped into the app.

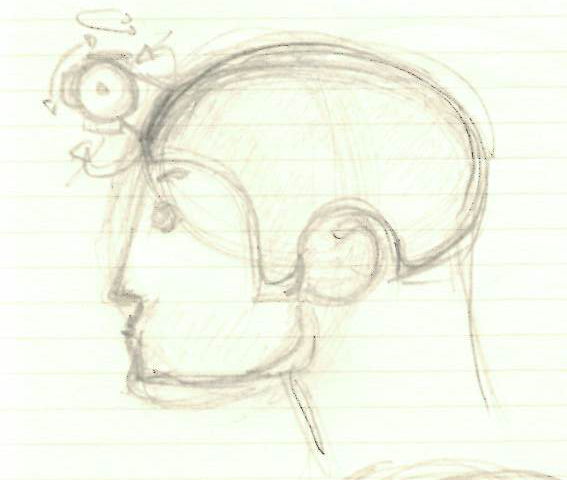

Many features of our mobile devices must now be added to the picture, so we will have the hardware that we currently carry in our pockets on our heads. Picture that, if you will: I would have on my head my EEG helmet, a pair of headphones, a microphone in front of my face (to record clean audio), an AR headset glued to my head, an eye-tracking device in front of my eyes, maybe some kinetic sensors (balance, etc.), a GPS, and probably some controls on the side for each of them. We might also have a camera, as we have on a cell phone, to take pictures or document the work we do, and some other minute things like batteries, antennae, etc. Our heads will become crowded indeed. Wouldn’t it be nice to have all (or nearly all) of this in one device, one headset?

The other element that strikes me is that if these all-in-one headsets are indeed our new indispensable work tool, then professionals will wear them for long stretches of time. Architects, designers, graphic artists of any kind, and medical doctors (for assisted surgery and diagnostics) will be wearing one of these during their work, which spans 8 or 10 hours, sometimes more. If so, the headset will need to be highly adjustable and/or personally customized for the user’s head, with all the idiosyncrasies of its shape, and adjusting for hair growth.

So, the idea for my final project is to make a headset. Of course, such a headset goes far beyond the scope of a Fablab first-timer like myself. But I would like to think of this project as a framework for any future headsets and modules that would be added or refined over time.

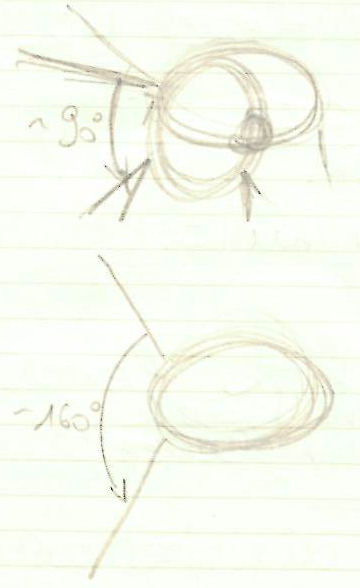

I went through several particular instantiations of that headset idea. I thought of an anamorphic projector headset. My impression is that there is still a lot of improvement to be made in the resolution of displays, so I thought of something where the display would not appear on a glass directly in front of the eye(s), but would use indirect light.

The idea would be to have a simple projector mounted on a headset, so that wherever we turn our head, it would project straight, undistorted text on the wall of the room in front of us. In order to do this, the headset would need to detect the arrangement of the wall in front of us and map it, and the projector would then adjust the projection (anamorphosis) accordingly.
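As a rough sketch of that adjustment, and not a design I have built, the correction can be treated as a planar homography if we assume the wall is a single flat plane and that the headset can somehow measure where the four corners of the projector frame land on it. Everything below is hypothetical: the function names `homography` and `apply_homography`, the 1280×720 frame, and the wall measurements are made up for illustration.

```python
import numpy as np

def homography(src, dst):
    """Estimate the 3x3 matrix H with dst ~ H @ src from four (x, y) pairs."""
    rows = []
    for (x, y), (u, v) in zip(src, dst):
        # Two linear constraints per point pair (direct linear transform).
        rows.append([-x, -y, -1, 0, 0, 0, u * x, u * y, u])
        rows.append([0, 0, 0, -x, -y, -1, v * x, v * y, v])
    # The solution is the null vector of the 8x9 system.
    _, _, vt = np.linalg.svd(np.asarray(rows, dtype=float))
    h = vt[-1].reshape(3, 3)
    return h / h[2, 2]

def apply_homography(h, pts):
    """Map an array of (x, y) points through H, with perspective division."""
    pts = np.asarray(pts, dtype=float)
    homog = np.hstack([pts, np.ones((len(pts), 1))])
    mapped = homog @ h.T
    return mapped[:, :2] / mapped[:, 2:3]

# Corners of the projector frame, in projector pixels (assumed resolution).
frame = [(0, 0), (1280, 0), (1280, 720), (0, 720)]
# Where those corners land on the wall, in wall centimetres (made-up values
# standing in for whatever the headset would measure): a skewed quadrilateral.
wall_quad = [(0, 0), (200, -20), (200, 130), (0, 100)]

proj_to_wall = homography(frame, wall_quad)
wall_to_proj = np.linalg.inv(proj_to_wall)

# The upright rectangle we want to see on the wall, inside the lit area.
target = [(20, 10), (180, 10), (180, 90), (20, 90)]
# Projector pixels to draw the text into so that it lands on that rectangle.
prewarped = apply_homography(wall_to_proj, target)
print(prewarped)
```

The text would be drawn into the `prewarped` quadrilateral in the projector image, and the tilt of the wall would then undo that distortion. A wall with corners or furniture is not a single plane, so this covers only the simplest case.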

A refinement of that idea would be to have the projector mounted on a 5-axis arm, so that the headset would only detect the position of the user and the arrangement of the walls.

This might still be out of reach for my current set of skills, so I thought of something else, related but different. I worked in a film archive, where we used to handle film reels. All of these reels were in individual boxes, each with a barcode label. I remember that my colleagues and I would use a handheld barcode reader. But when you have to handle boxes, having a reader in one hand is not that great. Of course, we would usually put the boxes on a small rolling cart and scan them there. But I thought that being able to scan items (film reels or anything else) without using one’s hands would be interesting.

Some design constraints come immediately to mind. First, the headset’s capabilities need to be modular, so that swapping one module for another is trivial. Second, it needs to be elegant: the device will be placed on the head, and we humans use facial expressions to relate to one another, so it needs to leave most of the facial features unencumbered. Finally, my impression is that heat exchange between the head and the environment is quite complex and makes up a large part of the overall heat exchange between the body and the environment, so we will need to be mindful of this.